Data-driven assessment reform: How UniSQ is navigating GenAI with evidence, not panic

Jessica Marrington, Chris Zehntner and Jo-Anne Ferreira, University of Southern Queensland

Generative AI’s growing capabilities continue to have a profound impact on higher education. With the evolution of sophisticated AI tools, students have powerful assistive technology at their fingertips. This technology can generate human-like essays, solve complex problems, and write code with just a few prompts. Traditional academic integrity policies banning the use of AI, along with AI use detection tools, are ineffective. The question, therefore, isn’t whether to integrate AI – it’s how to do it authentically and ethically, while ensuring robust mechanisms to assure student learning.

At UniSQ, our focus has been on understanding where our assessment vulnerabilities lie, and developing an approach that enables us to address these while embracing AI’s potential. Our data-informed risk flagging procedure systematically evaluated approximately 4,700 assessment items across the institution. The results provide not only a roadmap for others but also a bottom-up, evidence-based catalyst for assessment reform.

Beyond gut feelings: The Assessment Heatmap approach

Intuitively, we know that text-based assessments are more vulnerable to unauthorised AI use than, say, a viva voce. What is more challenging to identify is where change is most urgently needed across an institution, particularly when there are numerous assessments of the same or similar types. To guide our decision making, we developed a systematic risk flagging system that combines multiple data sources to identify which assessments were most vulnerable to unauthorised AI use.

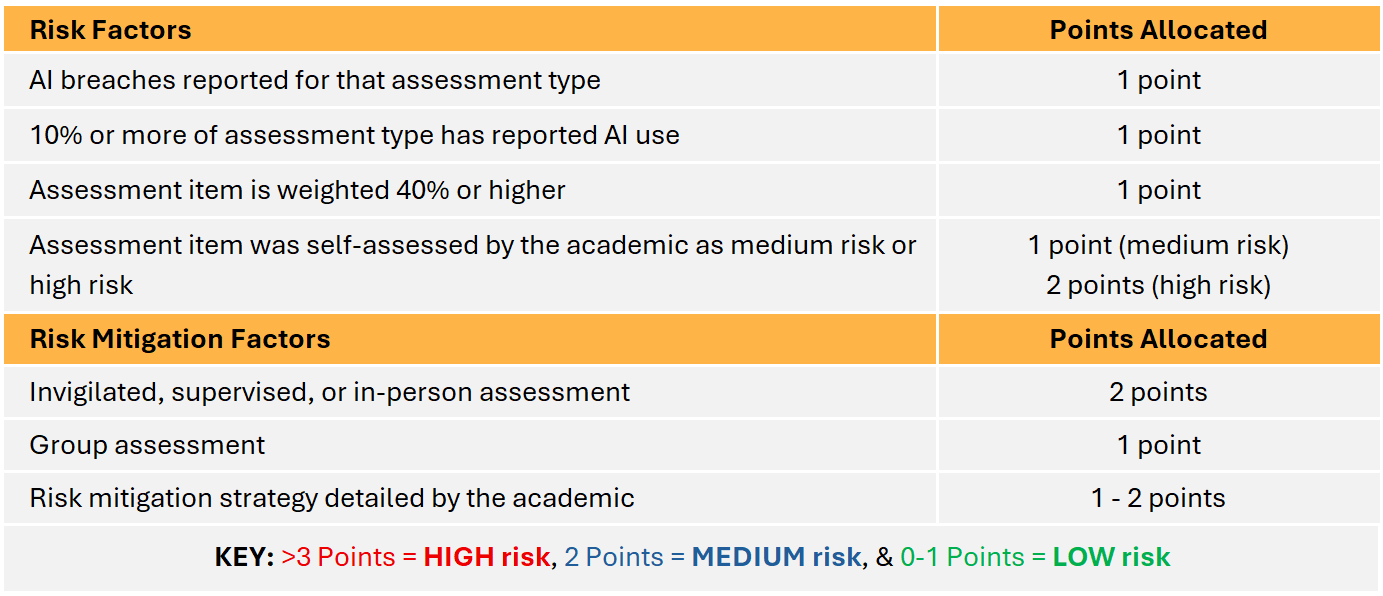

The system, while not without limitations, is simple and pragmatic, and it builds a comprehensive overview of assessment risk. It assigns points for risk factors and deducts points for mitigation strategies.

For example, an unsupervised essay weighted at 40%, with a low staff-assessed risk rating, scores as a high-risk assessment item, while a 10% online forum with no reported AI breaches remains a low-risk assessment item. The system gives a clear indication of where immediate attention is needed.
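The points-and-deductions logic described above can be sketched in a few lines of Python. This is only an illustration, not UniSQ's actual scoring scheme: the point values, the band thresholds, the field names and the assumption that the forum also has a low staff-assessed rating are all hypothetical, chosen so that the two examples in the text land in the bands described.

```python
from dataclasses import dataclass

# Hypothetical point values; the article does not publish the real ones.
STAFF_RISK_POINTS = {"low": 0, "medium": 1, "high": 2}
BREACH_POINTS = 2          # added if any AI-related breach has been reported
SUPERVISION_DEDUCTION = 3  # subtracted when the task is supervised


@dataclass
class AssessmentItem:
    name: str
    weighting: int          # percentage of the course grade
    supervised: bool
    staff_risk: str         # "low", "medium" or "high"
    reported_breaches: int


def risk_score(item: AssessmentItem) -> int:
    """Add points for risk factors, then deduct points for mitigations."""
    score = item.weighting // 10                 # assumed: 1 point per 10% weighting
    score += STAFF_RISK_POINTS[item.staff_risk]
    if item.reported_breaches > 0:
        score += BREACH_POINTS
    if item.supervised:
        score -= SUPERVISION_DEDUCTION
    return score


def risk_band(score: int) -> str:
    """Map a score to a heatmap band (thresholds are assumptions)."""
    if score >= 4:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


essay = AssessmentItem("Essay", 40, supervised=False,
                       staff_risk="low", reported_breaches=0)
forum = AssessmentItem("Online forum", 10, supervised=False,
                       staff_risk="low", reported_breaches=0)

for item in (essay, forum):
    print(item.name, risk_score(item), risk_band(risk_score(item)))
```

Under these assumed values the essay is flagged as high risk on weighting alone, despite its low staff rating, while the forum stays low. Combining several data sources in this way means no single signal decides the outcome.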

The power of visualisation

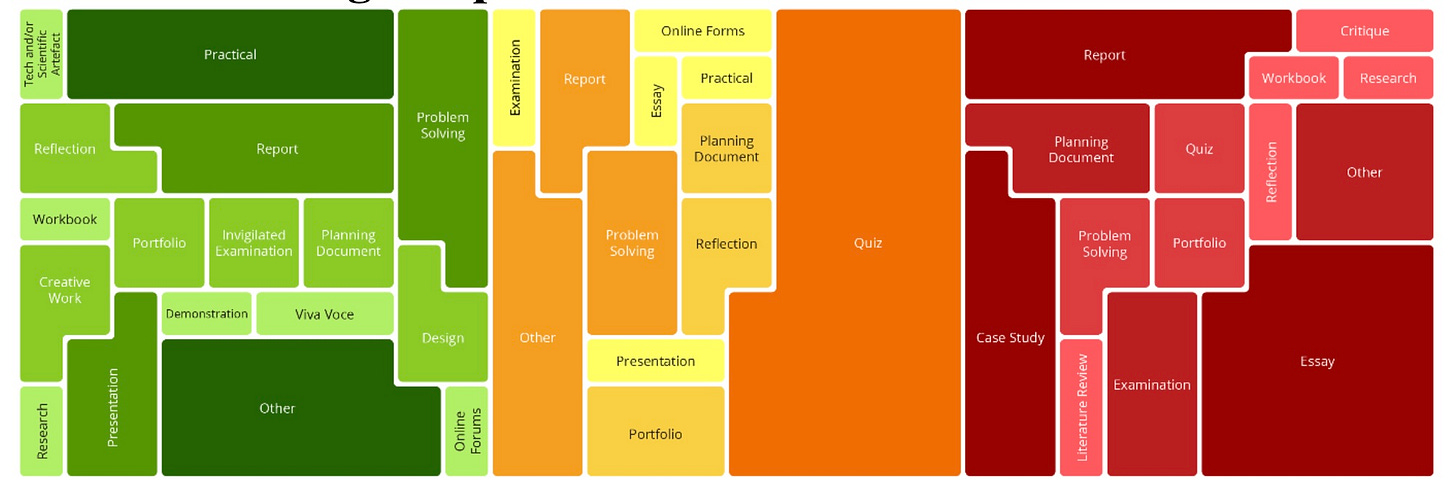

The most striking outcome of our approach is the Assessment Heatmap – a visual representation showing assessment types colour-coded by risk level. Green areas (low risk) include practical work, supervised examinations, and creative tasks. Yellow and orange areas (medium risk) cover presentations, some group work, and planning documents. The large red areas (high risk) prominently feature essays, reports, and case studies – exactly what many educators suspected but may not have been able to quantify.

This visualisation was particularly helpful for staff because it transformed the way we think about assessment design. As the heatmap shows, written reports are present in each section, pointing to how assessment design impacts risk.

Opportunity in assessment design: Developing ethical professional practice

To support staff in minimising the risk of unauthorised AI use in assessment, and to avoid duplicating effort, we aligned our strategies with existing curriculum review processes. Our focus was two-fold: what we could do in the short term, and what required more time to change. The short-term approach focused on assessment modification within existing course structures, introducing levels of AI use adapted from the AI Assessment Scale (AIAS). Each level includes guidelines about what is permitted and what additional information students must provide. This wasn’t about banning AI; it was about making its use in assessment items transparent and educational.

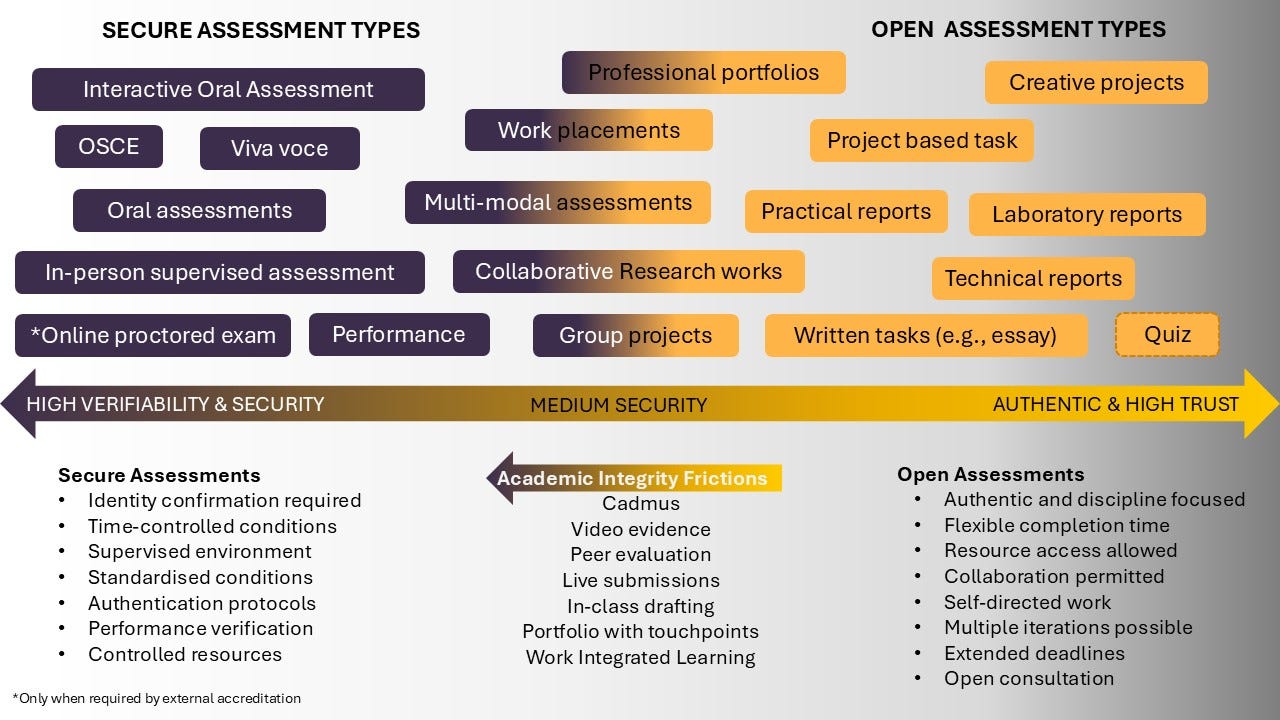

The longer-term transformation is more comprehensive: moving toward a program-level assessment approach that includes ‘secure’ and ‘open’ assessment types and, through thoughtful assessment design, achieves appropriate assessment security. Academics are encouraged to see the value in authentic, high-trust assessment types, but also to consider how they can design for security through the balanced use of more verifiable tasks and/or the introduction of academic integrity ‘frictions’. This is visually represented below.

While assessment security is important, it is not the only goal. It is through authentic, high-trust open assessment types that students are able to practise and master the core skills of future professional practice, while developing ethical academic practice and ensuring academic integrity.

The collaborative imperative

Key to the project’s success is collaborative implementation. Rather than top-down mandates, we have created multiple engagement opportunities and a co-design culture where solutions emerge from those closest to teaching and learning:

For staff:

AI Pedagogies Project workshops

School-based Assessment Transformation workshops

Weekly drop-in sessions with curriculum designers

Assessment Transformation Toolkit development

Programmatic Approaches to Assessment Working Party.

For students:

‘AI Powerup: Supercharge your learning’ resources

Student Senate consultations and presentations

Anonymous student surveys covering assessment preferences and AI use

Student focus groups on preferred modes of learning.

This collaborative approach recognises that sustainable assessment reform requires buy-in from everyone involved, which goes well beyond a mere compliance mindset.

Lessons we learned

Data beats speculation and panic. Systematic assessment of risk provides clear priorities and builds confidence in decision-making. Instead of broad-brush policies, institutions can target interventions where they are most needed.

Integration over prohibition. By moving to an ethical integration approach, we move beyond the false dichotomy of either banning AI or allowing unrestricted access. Students learn to use these tools ethically while developing critical thinking skills.

Balance is essential. The future likely requires both high-security verified assessments and authentic open assessments. The key is designing programs that strategically use both approaches to assure learning while preparing students for AI-supported workplaces.

Change takes time and support. Sustainable transformation requires professional development, academic resources, and ongoing dialogue with both staff and students.

The path forward

As TEQSA’s 2023 guidance on assessment reform acknowledges, different institutions will take different approaches based on their contexts. Our systematic, data-driven methodology provides a replicable model that other universities can adapt. It also aligns well with the thinking set out in TEQSA’s most recent resource released last week – Enacting assessment reform in a time of artificial intelligence.

The Assessment Heatmap Project demonstrates that we don’t have to choose between academic integrity and innovation. Instead, we can use evidence to guide thoughtful integration of AI tools in assessment, while maintaining rigorous standards for student learning.

Jessica Marrington and Jo-Anne Ferreira lead institutional assessment reform initiatives in the Learning and Teaching Futures Portfolio.

Chris Zehntner leads Academic Development in the Academic Affairs Portfolio of the University of Southern Queensland.